Originally developed at Google, Kubernetes has changed the way software development is done these days. A white ship’s wheel on a blue background seems to be everywhere at the moment. Businesses want to grow and pay less, DevOps teams want a stable platform that can run applications at scale, and developers want reliable and reproducible workflows to write, test and debug code. Kubernetes promises it all. Now that Jelastic PaaS offers managed Kubernetes, spinning up a cluster couldn’t be easier.

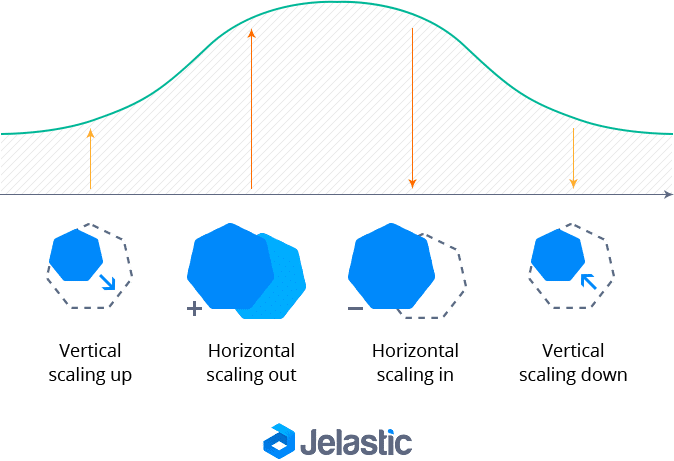

However, have you given any thought to how to get such a powerful container orchestration platform while paying only for the resources you actually need? This article sheds some light on horizontal and vertical scaling at both the Jelastic PaaS (infrastructure) and Kubernetes (application) levels.

Scaling Kubernetes on Infrastructure Level

A Kubernetes cluster typically consists of a master (or several) and multiple nodes where application pods are scheduled. The maths is quite simple here: the more applications you run in your cluster, the more resources (nodes) you need. Say you have a microservices application consisting of 3 services, each running as an individual pod that requests 1 GB of RAM. This means you will need a 4 GB node (Kubernetes components and the OS require some RAM too). What if you need additional RAM to handle high load or potential memory leaks, or if you deploy more services to the cluster? Right: you need either a larger node or an additional node in the cluster. In both cases, you usually pay for the full amount of resources that comes with a VM (i.e., you pay for, say, 3 GB of RAM even if half of it is unused). That’s not the case with Jelastic PaaS, though.
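The scheduler places pods according to their resource requests, so the “1 GB per pod” in this example corresponds to a memory request on each container. A minimal sketch of one such service follows; the service name and image are hypothetical placeholders, not part of the original setup.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: service-a                        # hypothetical service name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: service-a
  template:
    metadata:
      labels:
        app: service-a
    spec:
      containers:
      - name: service-a
        image: example.com/service-a:1.0 # hypothetical image
        resources:
          requests:
            memory: "1Gi"                # the scheduler reserves 1 GiB on the node for this pod
```

With three such deployments, the scheduler needs about 3 GiB of allocatable memory across the nodes, before counting system components.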

Vertical Scaling of Kubernetes Nodes

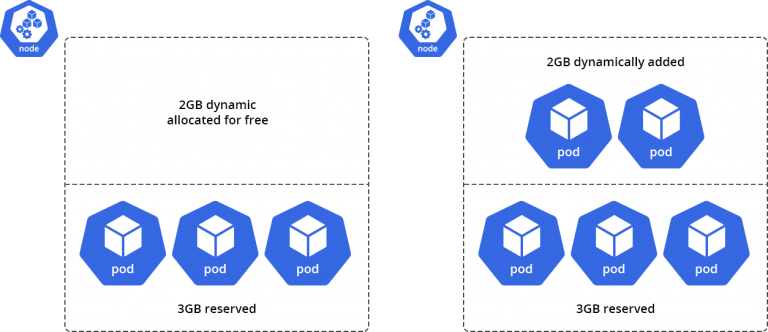

Let’s get back to the maths. If the application needs roughly 3 GB of RAM and there’s not much else going on in the cluster, you need just one node. Of course, having some free RAM in reserve is always a good idea, so a 5 GB node makes a lot of sense.

With Jelastic PaaS, however, you don’t have to pay for that reserve upfront. Instead, you can request a 3 GB node and keep 2 GB in reserve. When your application (Kubernetes pod) starts consuming more (which is configured on the Kubernetes side too) or you simply deploy more pods (as in the chart below), those extra 2 GB of RAM become available immediately, and you start paying for them only when they are used.

As a result, you can do some simple maths and figure out the best cluster topology: say, 3 nodes, with 4 GB of reserved RAM and 3 GB of dynamic RAM resources.

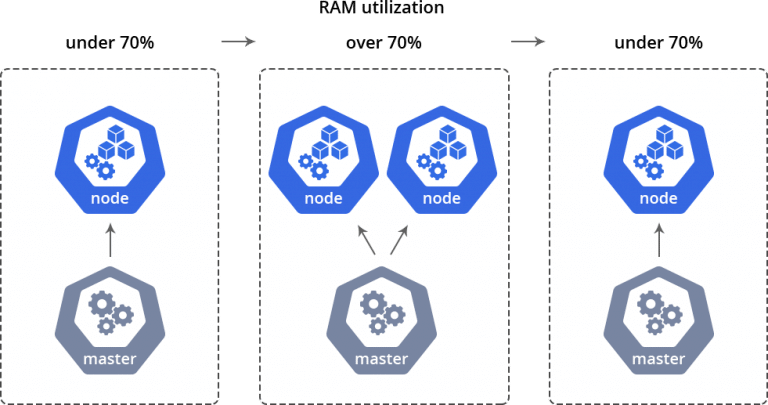

Horizontal Auto-Scaling of Kubernetes Nodes

Having one huge node in a Kubernetes cluster is not a good idea, since all deployments will be affected in case of an outage or any other major incident. Keeping several nodes in stand-by mode, on the other hand, is not cost-efficient. Can the cluster add a node only when it needs one? Yes: a Kubernetes cluster in Jelastic PaaS can be configured with horizontal node auto-scaling. New nodes are added to the cluster when RAM, CPU, I/O or disk usage reaches certain levels, and, needless to say, you are billed for the additional resources only while they are used. Newly added nodes are created according to the current topology, i.e., the existing vertical scaling configuration is applied to them. Jelastic PaaS scales back down as soon as resource consumption returns to expected levels. Your Kubernetes cluster will not starve, yet you will not pay for unused resources.

Scaling Kubernetes on Application Level

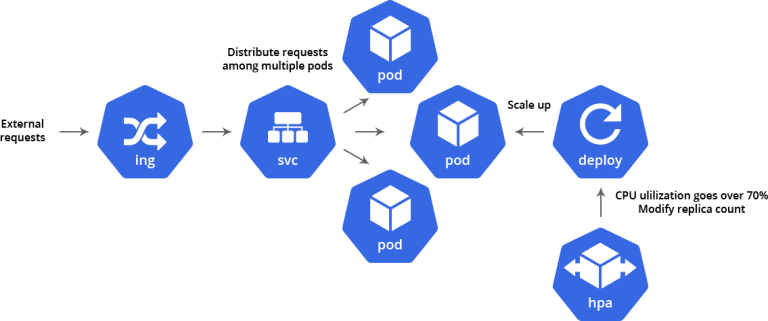

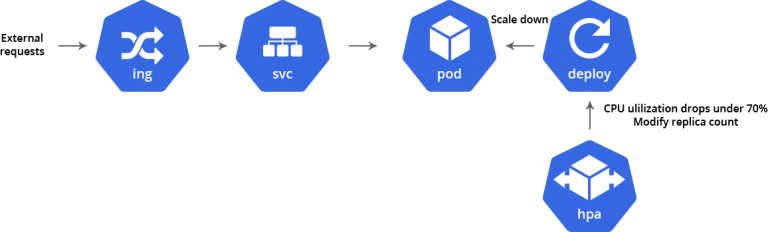

Kubernetes has its own Horizontal Pod Autoscaler (HPA). In simple words, the HPA adds or removes replicas of a chosen deployment based on observed CPU utilization (newer API versions can also use memory or custom metrics). If the average CPU utilization across the pods exceeds, say, 70% of their requested CPU, the HPA schedules more pods, and when CPU consumption gets back to normal, the deployment is scaled back down to its minimum number of replicas.
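The scaling decision itself follows a simple ratio, as described in the Kubernetes HPA documentation. A worked example, assuming 2 replicas running at an average of 90% CPU utilization against the 70% target mentioned above:

$$
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
$$

$$
\left\lceil 2 \times \frac{90\%}{70\%} \right\rceil = \lceil 2.57 \rceil = 3
$$

By default, the HPA skips scaling when the ratio is within a 10% tolerance of 1.0, which keeps it from reacting to small fluctuations.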

Why is this useful, and how does it work together with automatic horizontal scaling of Kubernetes nodes? Say you have one node with a couple of running pods. Suddenly, a particular service in one pod starts receiving a lot of requests and performing CPU-intensive operations. RAM utilization does not grow, so at this point nothing scales the application, and it will soon become unresponsive. The Kubernetes HPA scales up the pods, and the internal Kubernetes load balancer (the Service) spreads requests across them. Those new pods require more resources, and this is where Jelastic PaaS horizontal and vertical scaling comes into play: new pods are either placed on the same node and use dynamic RAM, or a new node is added if the existing ones don’t have enough resources.

Kubernetes Scaling Out:

Kubernetes Scaling In:

On top of that, you can set resource limits on Kubernetes pods. For example, if you know for sure that a particular service should not consume more than 1 GB of RAM, and anything above that indicates a memory leak, you can set a 1 GB memory limit: when the container exceeds it, Kubernetes kills it, and it is restarted automatically. This gives you control over the resources your Kubernetes deployments use.
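Such a cap is set per container in the pod template. A minimal sketch of the relevant fragment, with a hypothetical container name and image; if no memory request is given, Kubernetes uses the limit as the request.

```yaml
# Container-level fragment of a pod template (name and image are hypothetical).
containers:
- name: service-a
  image: example.com/service-a:1.0
  resources:
    limits:
      memory: "1Gi"   # a container that exceeds this is OOM-killed and then restarted
```

The restart is governed by the pod’s restartPolicy, which is Always for pods managed by a Deployment.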

Living Proof with WordPress Hosted in Kubernetes

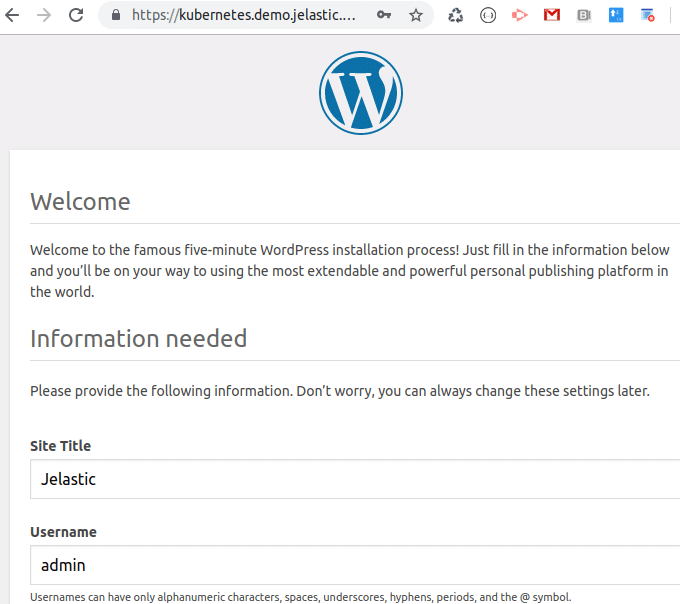

Now, let’s deploy a real-life application to a Kubernetes cluster to demonstrate all of the scaling features mentioned above. A WordPress website is a great example. The huge Kubernetes community is one of its biggest advantages and adoption drivers, so it’s really easy to find tutorials on deploying popular applications. Let’s follow the official WordPress tutorial (a non-production deployment is chosen to keep this article simple; you may also deploy WordPress using Helm charts).

Once that is done, one more step remains. Since access to applications running in a Jelastic PaaS Kubernetes cluster is provided by a Traefik reverse proxy, we need to create an Ingress that routes traffic to the WordPress service.

Create a file called wp-ingress.yaml with the following content:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  labels:
    app: wordpress
  name: wp
  annotations:
    kubernetes.io/ingress.class: traefik
    ingress.kubernetes.io/secure-backends: "true"
    traefik.frontend.rule.type: PathPrefixStrip
spec:
  rules:
  - http:
      paths:
      - path: /
        backend:
          serviceName: wordpress
          servicePort: 80
```

After you apply the manifest (for example, with kubectl apply -f wp-ingress.yaml in the namespace where the WordPress service runs), the WordPress website is available at https://<your-environment-name>.<jelastic-paas-url>.
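The manifest above uses the extensions/v1beta1 Ingress API, which Kubernetes stopped serving in version 1.22. On newer clusters, a roughly equivalent networking.k8s.io/v1 manifest could look like the sketch below. The Traefik 1.x-specific annotations are left out because their equivalents depend on the Traefik version your environment runs, and the namespace is an assumption; check both against your cluster.

```yaml
# Sketch for clusters serving networking.k8s.io/v1 (Kubernetes 1.19+).
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: wp
  namespace: wp                          # assumption: the namespace WordPress runs in
  labels:
    app: wordpress
  annotations:
    kubernetes.io/ingress.class: traefik # assumes the controller still honors this annotation
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix                 # pathType is mandatory in the v1 API
        backend:
          service:
            name: wordpress
            port:
              number: 80
```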

Great, we have a running WordPress instance in a Kubernetes cluster. Let’s create a horizontal pod autoscaler (HPA) for the WordPress deployment to make sure the service keeps responding under high load. In a terminal, run:

```shell
kubectl autoscale deployment wordpress --cpu-percent=30 --min=1 --max=10 -n wp
```

Now, if the average CPU utilization of the WordPress pods exceeds 30% of their requested CPU, the autoscaler modifies the deployment to add more pod replicas, and the internal load balancer spreads requests across them. Of course, the chosen values are for demo purposes only and can be adjusted to your needs.
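The same autoscaler can also be declared as a manifest and kept under version control. Below is a sketch using the autoscaling/v2 API (Kubernetes 1.23+), assuming the wordpress deployment lives in the wp namespace as in the command above. CPU utilization is measured against the containers’ CPU requests, so the WordPress container needs a CPU request, and a metrics source such as metrics-server must be running; otherwise the HPA reports its target as <unknown>.

```yaml
# Declarative sketch equivalent to:
#   kubectl autoscale deployment wordpress --cpu-percent=30 --min=1 --max=10 -n wp
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: wordpress              # kubectl autoscale names the HPA after the deployment
  namespace: wp
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: wordpress
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 30 # percent of the pods' requested CPU
```

Apply it with kubectl apply -f instead of running kubectl autoscale; creating both would produce two HPAs with the same name.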

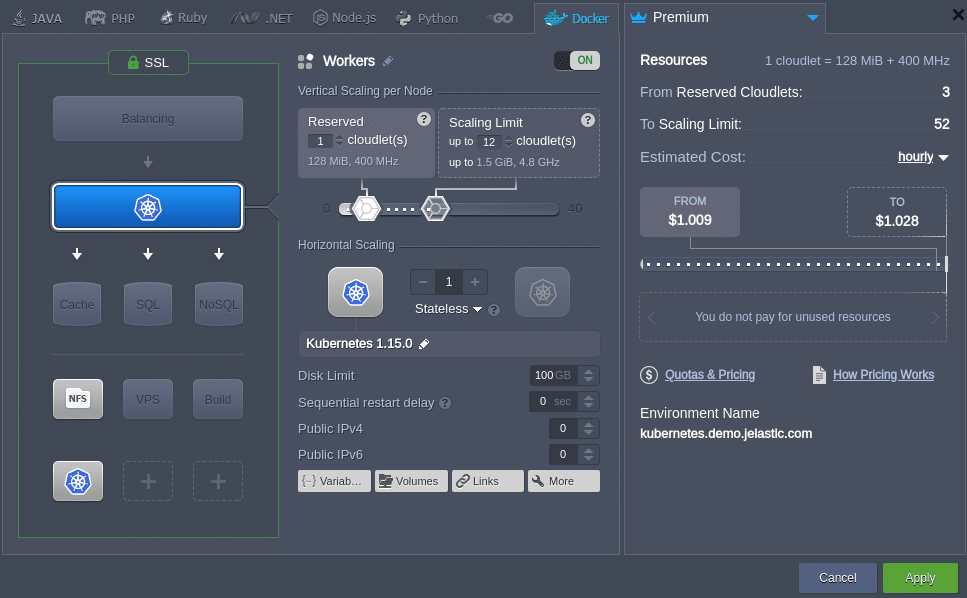

The next step is to review the vertical and horizontal scaling options of the Jelastic PaaS Kubernetes environment. There are two main goals here:

- Pay only for actually utilized resources;

- Make sure the Kubernetes cluster has a spare node when required.

To make this demo simpler (i.e., to run out of RAM faster), let’s limit the node to 1.5 GB of RAM and 4.8 GHz of CPU. Click Change Environment Topology and set the Scaling Limit per node.

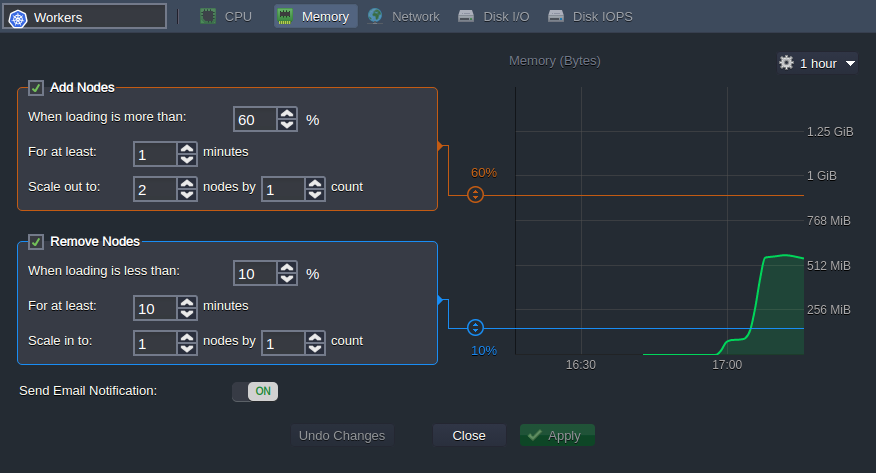

Configuring automatic horizontal scaling is the final step. As with vertical scaling, the values are chosen so that they trigger quickly for the purposes of this demo. Let’s tell Jelastic PaaS when to add and remove Kubernetes nodes: open Settings > Auto Horizontal Scaling and add the required triggers.

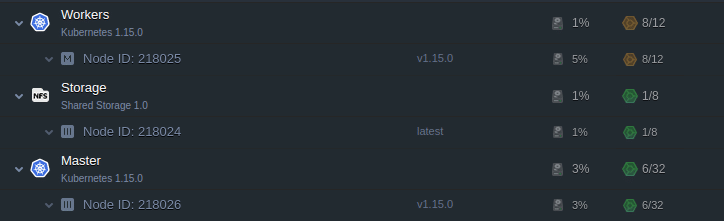

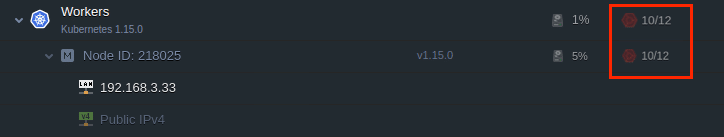

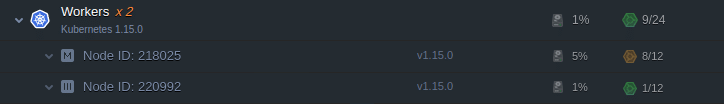

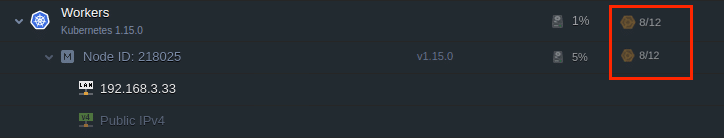

Let’s take a look at memory consumption. The Kubernetes node (listed under Workers) uses 8 cloudlets, and 4 more cloudlets can be added dynamically, i.e., there is some RAM available to schedule a few more pods.
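These figures line up with Jelastic’s cloudlet unit, which corresponds to 128 MiB of RAM and 400 MHz of CPU. Working through the arithmetic for the 12-cloudlet scaling limit (8 cloudlets in use plus 4 dynamic):

$$
\begin{aligned}
8 + 4 &= 12\ \text{cloudlets}\\
12 \times 128\ \text{MiB} &= 1536\ \text{MiB} = 1.5\ \text{GiB}\\
12 \times 400\ \text{MHz} &= 4800\ \text{MHz} = 4.8\ \text{GHz}\\
8 \times 128\ \text{MiB} &= 1024\ \text{MiB} = 1\ \text{GiB in use}
\end{aligned}
$$

In the same units, 2 spare cloudlets amount to 256 MiB of RAM.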

It’s time to put some stress on the WordPress website. There are multiple ways to generate HTTP GET requests. We will use the simplest one: wget in a while-true loop executed within the WordPress pod itself (wordpress is the service name, which resolves to an internal IP accessible only from within the Kubernetes cluster):

```shell
while true; do wget -q -O- http://wordpress; done
```
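Running the loop inside the WordPress pod means the load generator’s own CPU usage counts toward that pod’s utilization. An alternative, similar to the approach in the Kubernetes HPA walkthrough, is a separate throwaway pod. A sketch, assuming WordPress runs in the wp namespace; the pod name and busybox tag are illustrative.

```yaml
# Hypothetical load-generator pod: sends requests to the wordpress Service in a loop.
apiVersion: v1
kind: Pod
metadata:
  name: load-generator   # hypothetical name
  namespace: wp          # assumption: same namespace as the wordpress Service
spec:
  restartPolicy: Never
  containers:
  - name: load
    image: busybox:1.36
    command: ["/bin/sh", "-c", "while true; do wget -q -O- http://wordpress > /dev/null; done"]
```

Start it with kubectl apply -f and stop the load with kubectl delete pod load-generator -n wp.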

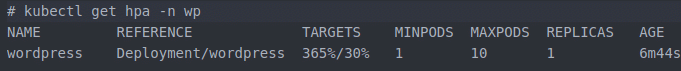

A few moments later, we can observe the following data from HPA:

```shell
kubectl get hpa -n wp
```

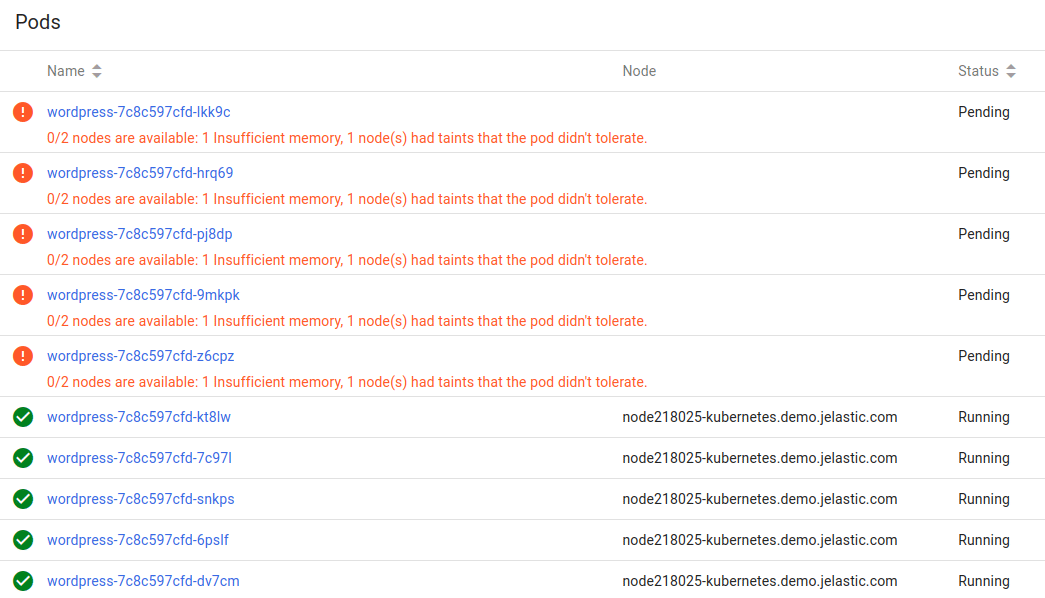

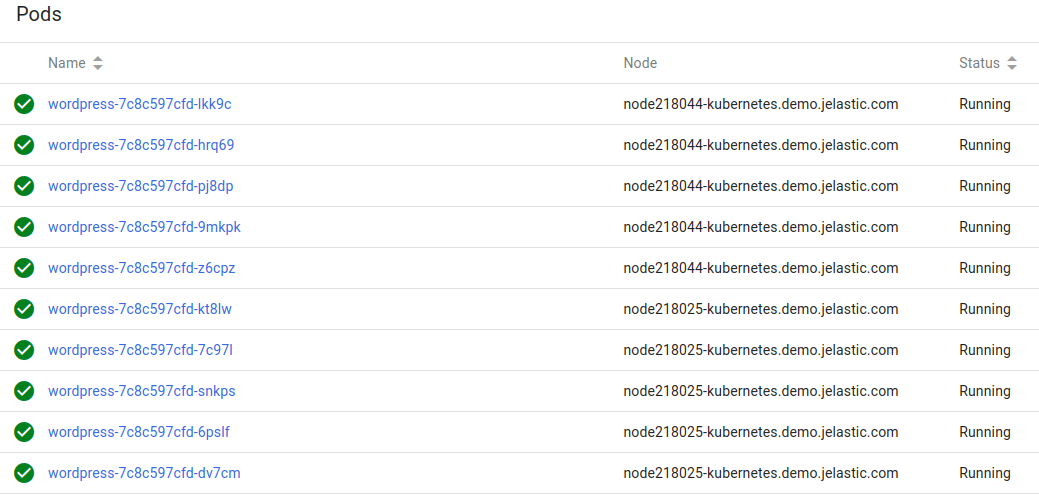

The Kubernetes autoscaler has modified the WordPress deployment to add more replicas. As expected, the Kubernetes cluster does not have enough RAM, so, as the Kubernetes Dashboard shows, the remaining 5 pods cannot be scheduled:

A few pods were able to start, though, as Jelastic PaaS dynamically added cloudlets (RAM & CPU) to the node. However, the remaining 2 cloudlets were not enough for even one more pod to start.

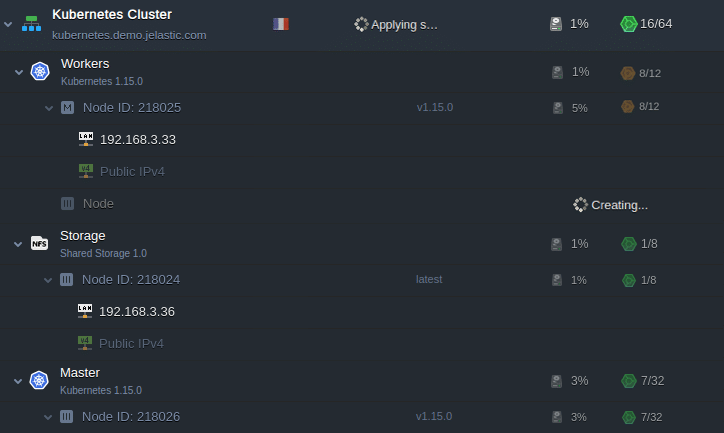

This is where the magic begins! Since we have configured automatic horizontal scaling of nodes, Jelastic PaaS is now adding a new node.

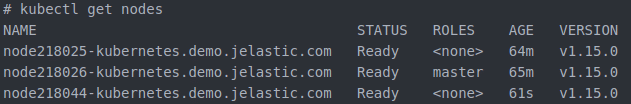

Let’s check whether the new node has registered with the master by running the following command:

```shell
kubectl get nodes
```

A few moments later, the Kubernetes Dashboard shows:

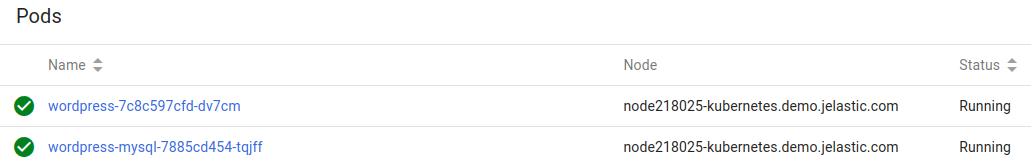

Fantastic! All pods have started, which means WordPress can handle all the incoming requests we initiated a few minutes ago. Now, let’s stop the wget loop and wait for a minute. The replica count drops back to 1, which means we no longer need the additional node.
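How quickly the replica count drops is governed by the HPA’s scale-down stabilization window, which defaults to 300 seconds in current Kubernetes releases. With the autoscaling/v2 API it can be set per HPA through the behavior field. A sketch of the fragment to merge into the wordpress HPA’s spec; the 60-second value is illustrative, not the setting used in this demo.

```yaml
# Fragment of an autoscaling/v2 HorizontalPodAutoscaler spec.
spec:
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 60   # illustrative: consider the last 60 s of recommendations before scaling down
```

A shorter window releases resources sooner but makes the deployment more likely to flap under bursty load.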

Memory utilization figures suggest that the node can be deleted.

And Jelastic PaaS indeed removes it in about one minute.

What have we just witnessed? Let’s recap. Creating an HPA for the WordPress deployment makes Kubernetes schedule more pod replicas to handle high load; it is up to the application administrator to configure the triggers. Next, Jelastic PaaS dynamically allocates RAM within nodes, and if the nodes run out of RAM, a new node is added to the Kubernetes cluster. When resource utilization gets back to normal, the HPA sets the replica count back to 1, and Jelastic PaaS removes the extra node. When the HPA scales up the deployment again, Jelastic PaaS reacts accordingly. At no point do you pay for idle RAM, and there is no downtime!

With so many scaling options at both the cluster (infrastructure) and application (deployment/pod) level, a Kubernetes cluster in Jelastic PaaS becomes a smart platform that grows or shrinks according to your application workloads. Kubernetes also checks whether your application is up and running and restarts it if necessary, and when you update the application with new images, it rolls them out with zero downtime, which makes continuous delivery a reality, not just a buzzword.
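Both behaviors are configured in the Deployment: health checks through probes, and zero-downtime updates through the rolling update strategy. A sketch of the relevant fragment, assuming the WordPress container listens on port 80 and that / is an acceptable health-check path; the image tag and probe timings are illustrative.

```yaml
# Fragment of a Deployment spec (sketch).
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0              # keep old pods until new ones are ready
      maxSurge: 1                    # start at most one extra pod during an update
  template:
    spec:
      containers:
      - name: wordpress
        image: wordpress:6.2.1-apache  # example tag; change it to roll out a new image
        readinessProbe:              # the pod receives traffic only after this succeeds
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 10
          periodSeconds: 10
        livenessProbe:               # the container is restarted if this keeps failing
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 30
          periodSeconds: 20
```

A lightweight endpoint that does not depend on the database is usually a safer liveness target than the home page, since a database outage would otherwise trigger restarts.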

Try it yourself for free on our Jelastic PaaS platform, and share your feedback with us so we can improve it further!