Kubernetes (K8s) is one of the leading platforms for deploying and managing fault-tolerant containerized applications. It is used to build cloud-native microservice applications, and it enables companies to migrate existing projects into containers for greater efficiency and resiliency. A Kubernetes cluster can handle complex container orchestration tasks such as deployment, service discovery, rolling upgrades, self-healing, and security management.

Kubernetes is supported by the Cloud Native Computing Foundation, which helps enable cloud portability without vendor lock-in. Kubernetes clusters can be deployed anywhere: on bare metal or in a public or private cloud.

At the same time, we should not forget that spinning up a Kubernetes cluster on your own servers from scratch is a complicated procedure. It requires a deep understanding of the cluster components and how they should be interconnected, as well as time and skills for monitoring and troubleshooting. For more details, refer to the Kubernetes The Hard Way article.

In addition, managed Kubernetes services automate and ease a number of operations, but the cloud “right-sizing” problem still remains. To get maximum efficiency, you have to predict the size of a worker node and of the containers running inside it. Otherwise, you may end up paying for large workers that are not fully loaded, or using small VMs and relying on automatic horizontal scaling, which may add complexity.

Jelastic PaaS removes a number of these barriers and provides the functionality needed to get started with Kubernetes hosting easily while gaining maximum efficiency in terms of resource consumption:

- Complex cluster setup is fully automated and converted to “one click” inside an intuitive dashboard UI;

- Instant automated vertical scaling based on load changes;

- Fast automatic or manual horizontal scaling of worker nodes with integrated automatic discovery;

- The pay-per-use pricing model is unlocked for Kubernetes hosting, so there is no need to overpay for reserved but unused resources;

- Jelastic PaaS shared storage is integrated with the dynamic volume provisioner, so data volumes used by applications are automatically placed on the storage drive and can be accessed by the user over SFTP/NFS or via an integrated file manager;

- No public IPs are required by default: a shared load balancer, provided out of the box, processes all incoming requests as a proxy server;

- Kubernetes clusters can be provisioned across multiple regions, clouds and on-premises with no fragmentation or differences in configuration and no vendor lock-in.

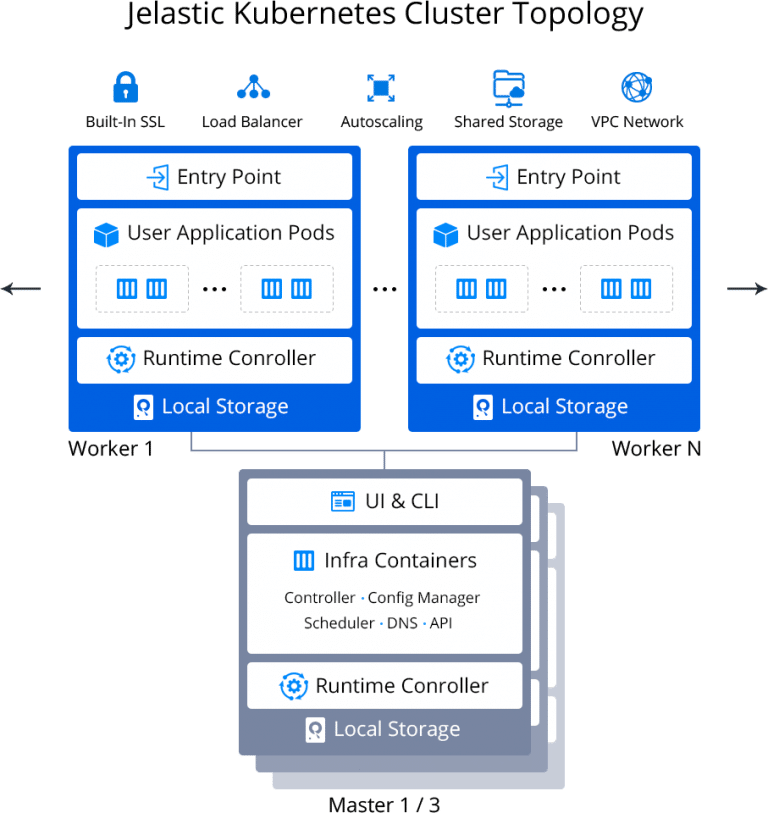

Jelastic PaaS supplies the Kubernetes cluster with the following pre-installed components:

- containerd container runtime;

- CNI plugin (powered by Weave) for overlay network support;

- Traefik ingress controller for transferring HTTP/HTTPS requests to services;

- Helm package manager to auto-install pre-packaged solutions from repositories;

- CoreDNS for internal name resolution;

- Dynamic provisioner of persistent volumes;

- Dedicated NFS storage;

- Metrics server for gathering stats;

- Built-in SSL for protecting ingress network;

- Web UI dashboard.

Explore the Kubernetes cluster installation steps in the video or the instructions below:

Kubernetes Cluster Installation

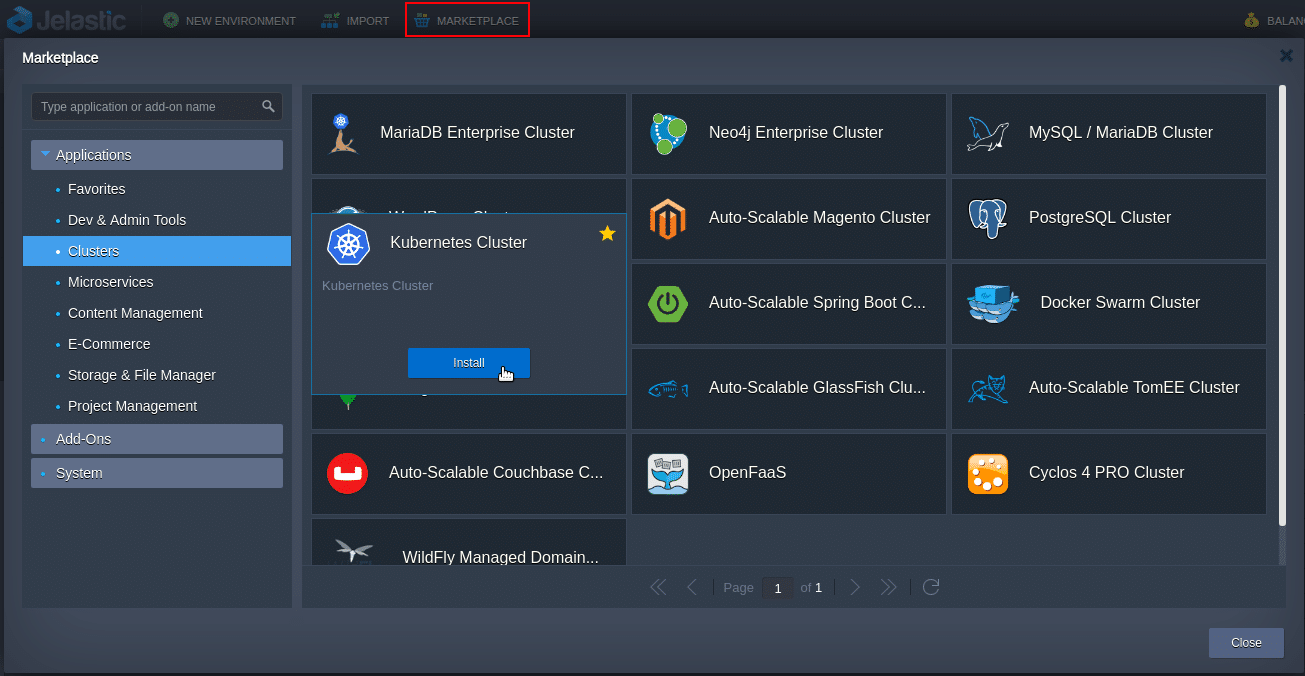

1. To get started, log in to the Jelastic PaaS dashboard, find the Kubernetes Cluster in the Marketplace and click Install.

Or import the manifest from GitHub using the link:

https://github.com/jelastic-jps/kubernetes/blob/master/manifest.jps

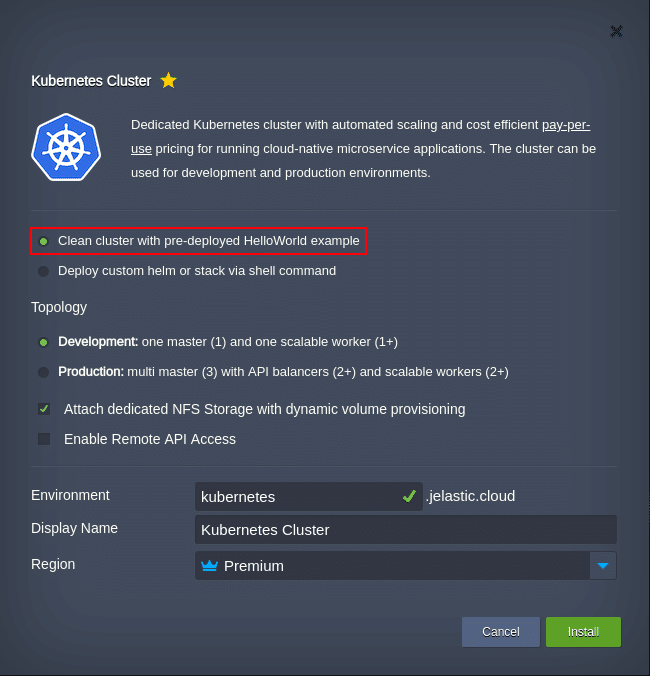

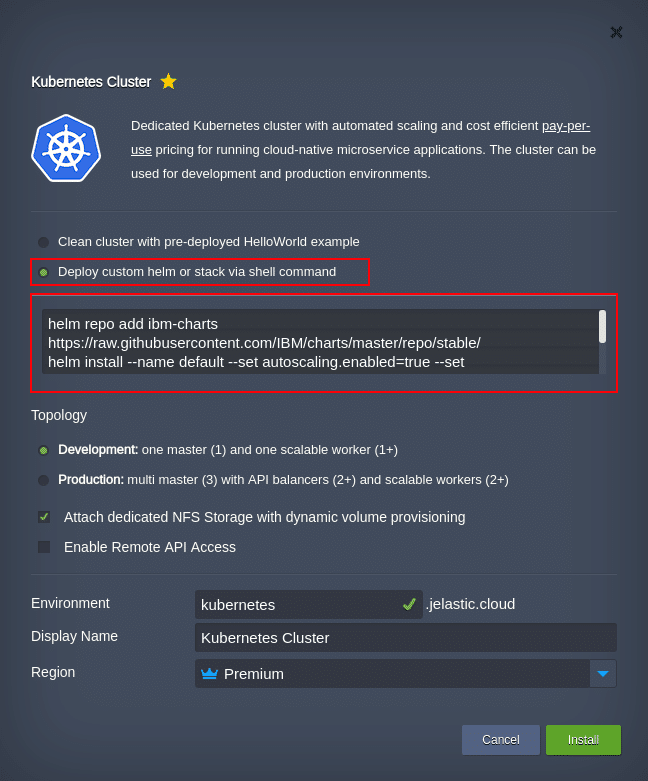

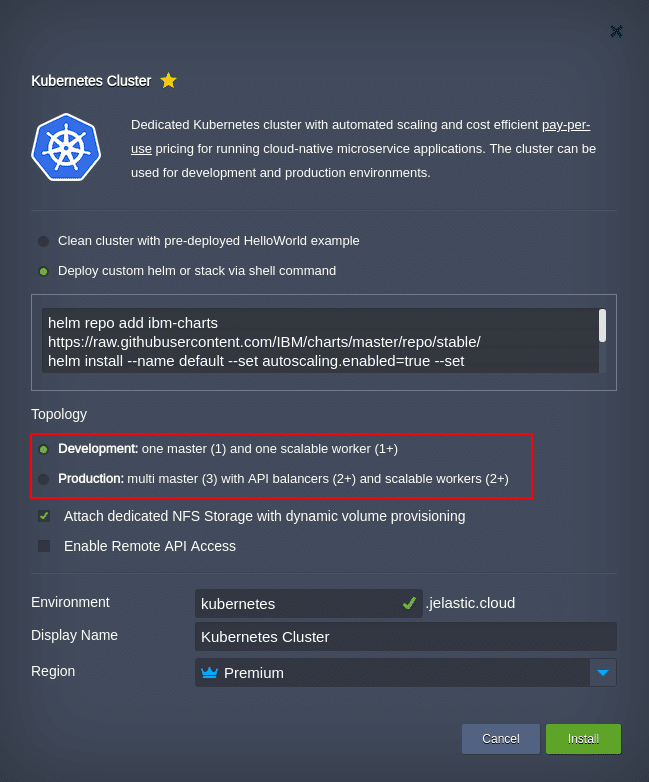

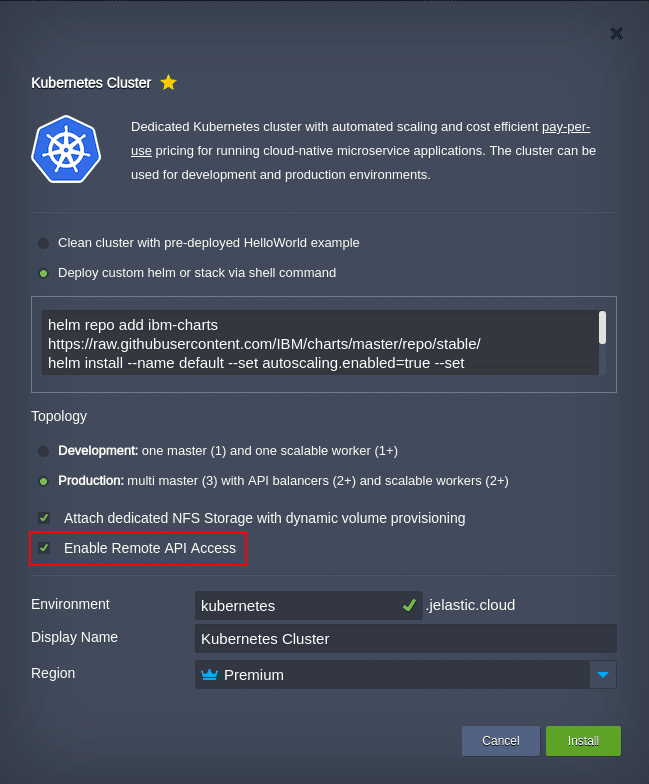

2. Choose the type of installation:

- Clean cluster with pre-deployed Hello World example;

- Deploy custom helm or stack via shell commands – type a list of commands to execute helm chart or other commands for a custom application deployment.

By default, you are offered the Open Liberty application server runtime, installed with a predefined set of commands:

- Adding the repository:

helm repo add ibm-charts https://raw.githubusercontent.com/IBM/charts/master/repo/stable/

- Installing Open Liberty from this repository:

helm install --name default --set autoscaling.enabled=true --set autoscaling.minReplicas=2 ibm-charts/ibm-open-liberty --debug

- Applying the manifest:

kubectl apply -f https://raw.githubusercontent.com/jelastic-jps/kubernetes/master/addons/openliberty.yaml

3. Choose the required topology of the cluster. Two options are available:

- Development: one master (1) and one scalable worker (1+) – lightweight version for testing and development purposes;

- Production: multi master (3) with API balancers (2+) and scalable workers (2+) – cluster with pre-configured high availability for running applications in production.

Where:

- Multi master (3) – three master nodes;

- API balancers (2+) – two or more load balancers for distributing incoming API requests;

- Scalable workers (2+) – two or more workers (Kubernetes nodes).

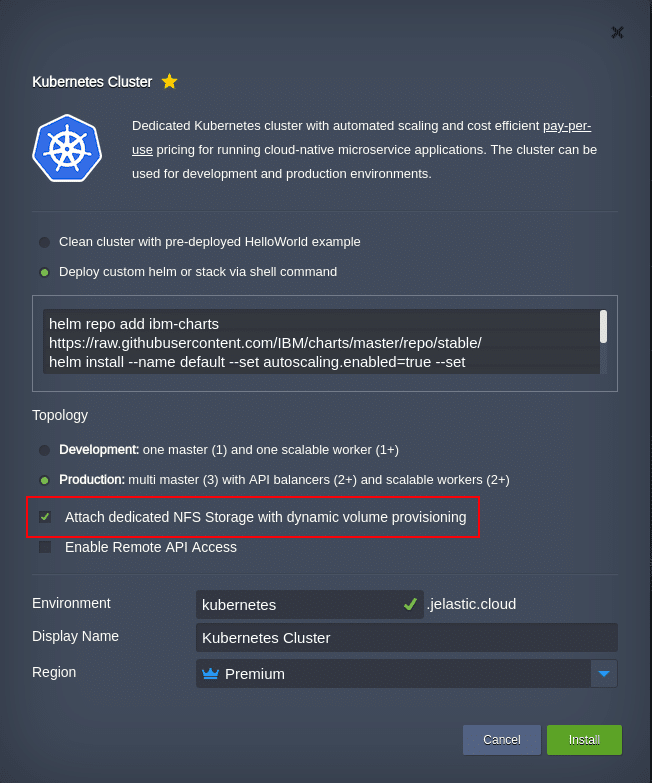

4. Attach dedicated NFS storage with dynamic volume provisioning.

By default, every node has its own filesystem with read-write permissions, but to make data accessible from other containers or to persist it across redeployments, the data should be placed on a dedicated persistent data volume.

You can use a custom dynamic volume provisioner by specifying the required settings in your deployment YAML files.

Or, you can keep the pre-configured volume manager and NFS storage built into the Jelastic PaaS Kubernetes cluster. As a result, the data volumes are provisioned dynamically on demand and connected to the containers. The storage node can be accessed and managed using the UI file manager, SFTP or any NFS client.
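As a rough illustration of what requesting a dynamically provisioned volume can look like, the sketch below writes a PersistentVolumeClaim manifest to a file. The claim name `demo-data`, the 1Gi size and the file location are made up for this example, and it assumes the pre-configured provisioner is registered as the cluster's default storage class (otherwise a `storageClassName` field would be needed).

```shell
#!/bin/sh
# Sketch only: write a PersistentVolumeClaim manifest to a file.
# Assumptions (not from the article): the claim name "demo-data", the 1Gi
# request, and that the built-in NFS provisioner is the default storage class.
manifest="${TMPDIR:-/tmp}/demo-data-pvc.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-data
spec:
  accessModes:
    - ReadWriteMany   # NFS-backed volumes can be mounted by several pods
  resources:
    requests:
      storage: 1Gi
EOF

echo "Manifest written to $manifest"
# Once kubectl is configured for the cluster, the claim could be created with:
#   kubectl apply -f "$manifest"
```

A pod then refers to the claim by name in its `volumes` section, and the provisioner is expected to create the backing volume on the storage node on demand.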

5. In order to highlight all package features and peculiarities, this instruction initiates the installation of the Open Liberty application server runtime in a Production Kubernetes cluster topology with built-in NFS storage.

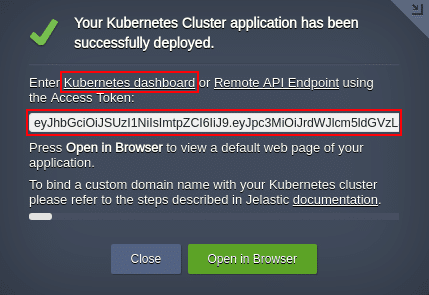

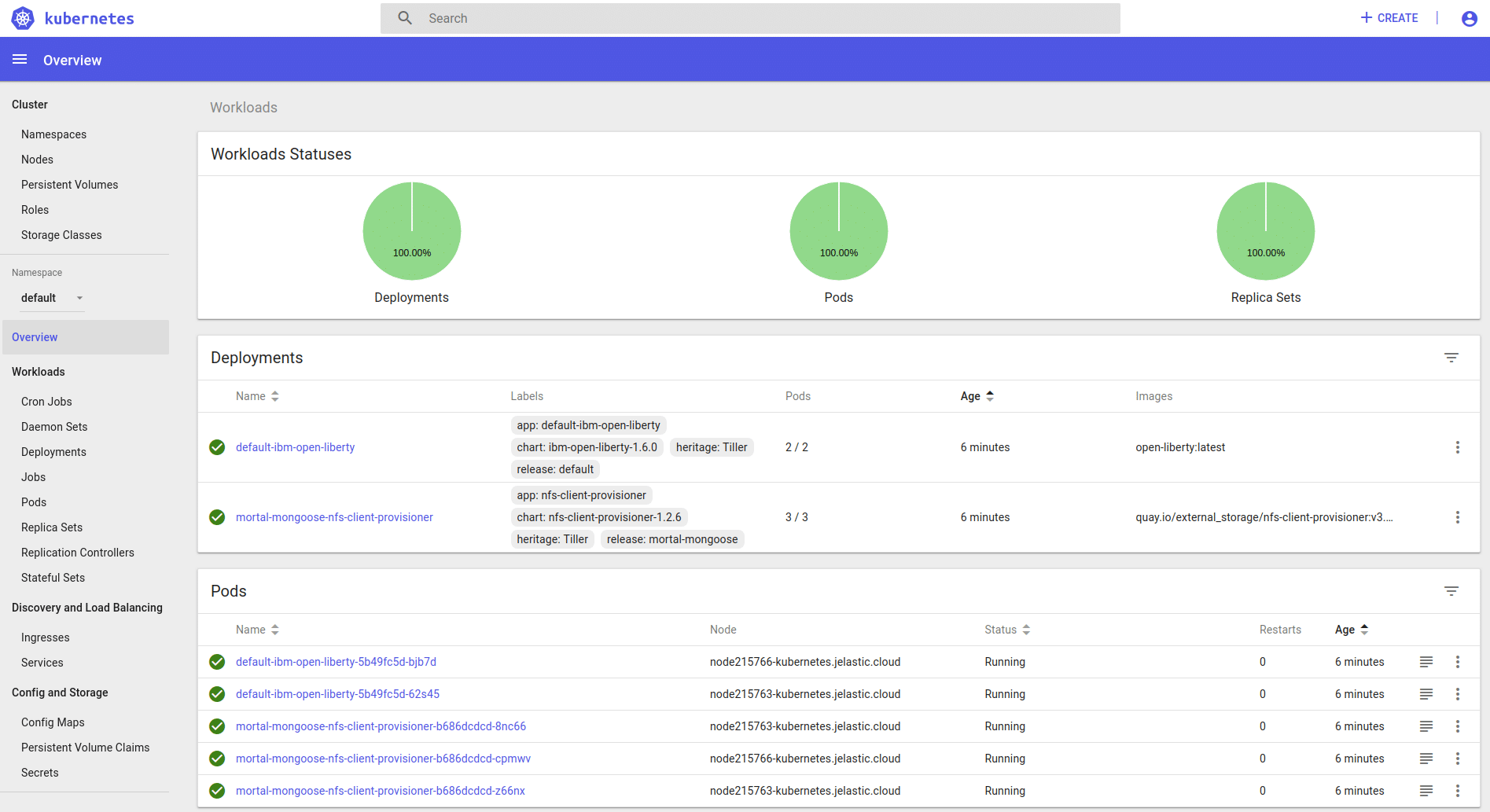

Click the Install button and wait a few minutes. Once the installation process is completed, the cluster topology looks as follows:

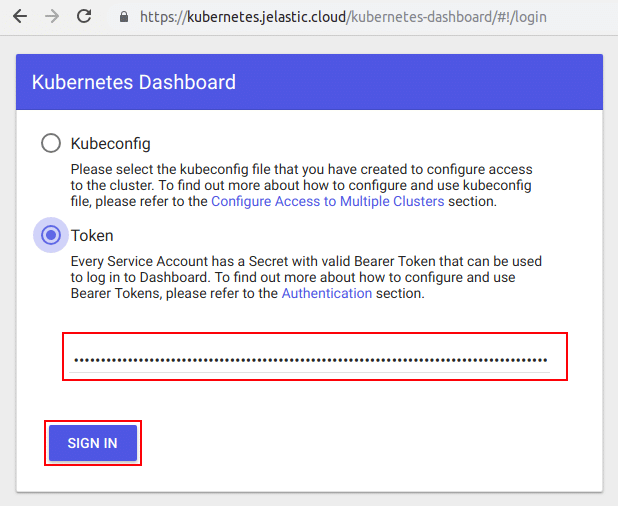

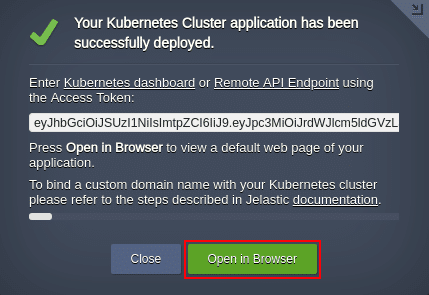

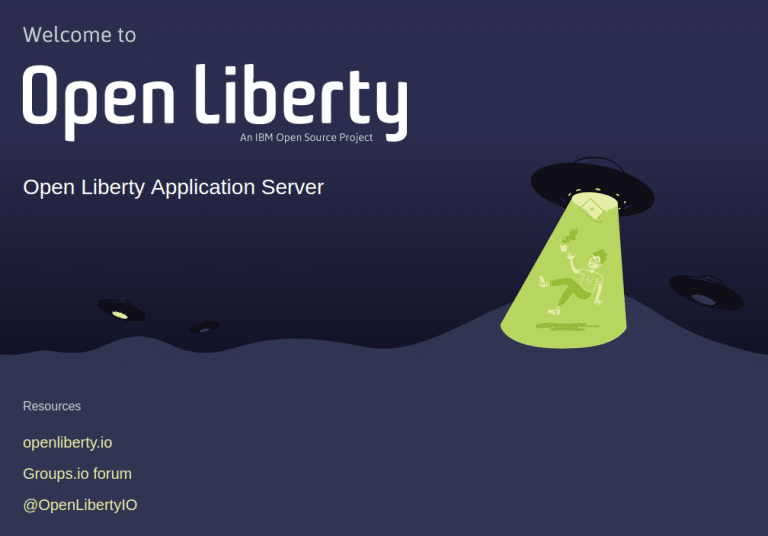

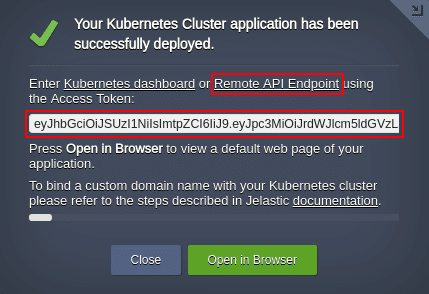

6. You can access the Kubernetes administration dashboard along with the Open Liberty application server welcome page from the successful installation window.

- Use the Access Token and follow the Kubernetes dashboard link to manage the Kubernetes cluster;

- Access the Open Liberty welcome page by pressing the Open in Browser button.

Kubernetes Cluster API Access

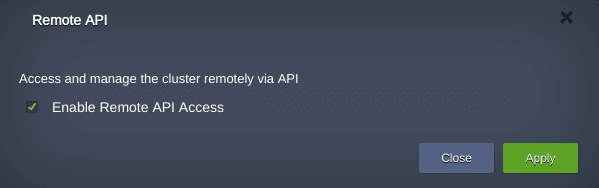

In order to access and manage the created Kubernetes cluster remotely using the API, tick the Enable Remote API Access checkbox.

The Remote API Endpoint link and Access Token should be used to access the Kubernetes API server (Balancer or Master node).

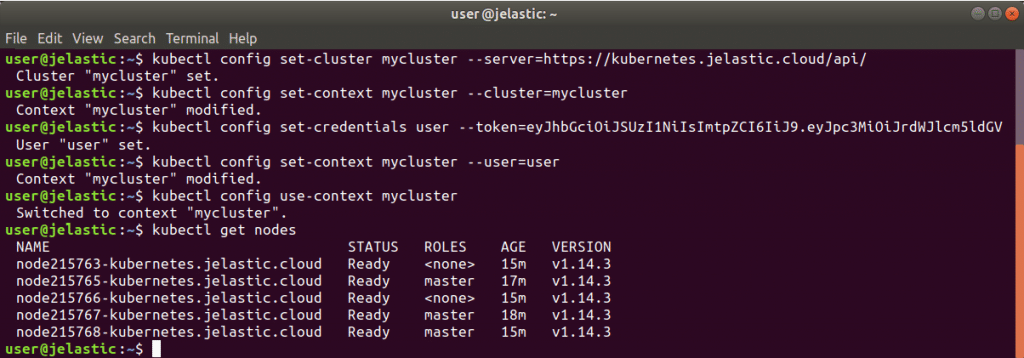

The best way to interact with the API server is using the Kubernetes command line tool kubectl:

- Install the kubectl utility on your local computer following the official guide (for this article, the installation for Ubuntu Linux was used);

- Create a local configuration for kubectl. To do this, open a terminal on your local computer and issue the following commands:

kubectl config set-cluster mycluster --server={API_URL}

kubectl config set-context mycluster --cluster=mycluster

kubectl config set-credentials user --token={TOKEN}

kubectl config set-context mycluster --user=user

kubectl config use-context mycluster

Where:

- {API_URL} – Remote API Endpoint link;

- {TOKEN} – Access Token.
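The same configuration steps can be wrapped in a small helper, sketched below. The function name `configure_remote_cluster` is hypothetical; the cluster, context and user names are the ones used in the commands above, and the two `set-context` calls are merged into one.

```shell
#!/bin/sh
# Hypothetical helper: registers the remote cluster in the local kubectl
# configuration. Only standard "kubectl config" subcommands are used.
# Usage: configure_remote_cluster <API_URL> <TOKEN>
configure_remote_cluster() {
    api_url=$1   # Remote API Endpoint link
    token=$2     # Access Token

    # Stop at the first failing step so a half-written context is not activated.
    kubectl config set-cluster mycluster --server="$api_url" &&
    kubectl config set-credentials user --token="$token" &&
    kubectl config set-context mycluster --cluster=mycluster --user=user &&
    kubectl config use-context mycluster
}
```

It would be called as `configure_remote_cluster "{API_URL}" "{TOKEN}"`, with the placeholders replaced by the values from the installation window. Passing the token as an argument leaves it in the shell history, so reading it from a file or an environment variable may be preferable.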

Now you can manage your Kubernetes cluster from a local computer following the official tutorial.

As an example, let’s take a look at the list of all available nodes in our cluster. Open a local terminal and issue a command using kubectl:

kubectl get nodes

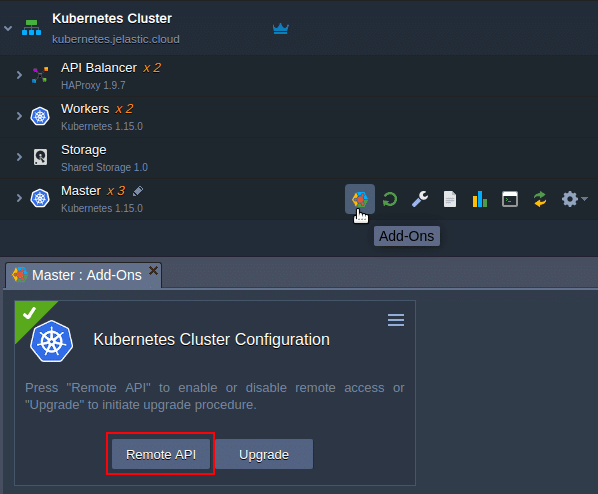

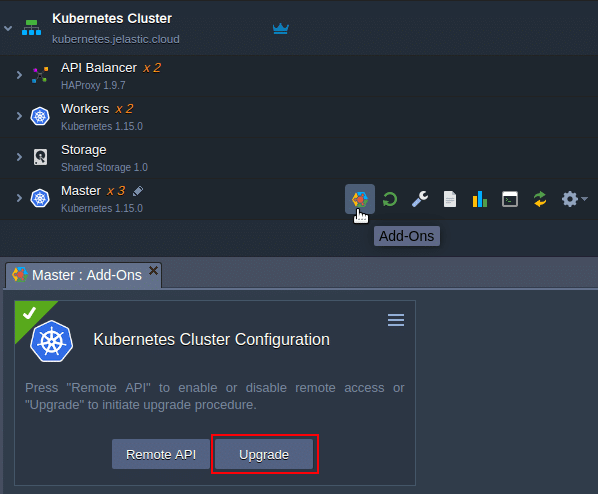

In order to enable/disable the API service after installation, use the Master node Configuration Add-On.

Kubernetes Cluster Upgrade

To keep the Kubernetes cluster software up to date, use the Configuration Add-On and click the Upgrade button. The add-on checks whether a new version is available and, if so, installs it. During the upgrade procedure, all the nodes, including masters and workers, are redeployed to the new version one by one. All existing data and settings remain untouched. Keep in mind that the upgrade procedure is sequential between versions: if your cluster is several versions behind the latest one, you will have to run the upgrade procedure multiple times. The upgrade option appears only once a new version has been globally published within Jelastic PaaS.

In order to avoid downtime of your applications during redeployments, please consider using multiple replicas for your applications.
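One possible way to check this before starting an upgrade is sketched below. The helper name `ensure_min_replicas` is hypothetical; it relies only on the standard `kubectl get` and `kubectl scale` commands and assumes kubectl is already configured for the cluster.

```shell
#!/bin/sh
# Hypothetical helper: scale a deployment up if it runs fewer replicas than
# the given minimum. Usage: ensure_min_replicas <deployment> <min> [namespace]
ensure_min_replicas() {
    name=$1
    min=$2
    ns=${3:-default}

    # Read the desired replica count from the deployment spec.
    current=$(kubectl get deployment "$name" -n "$ns" \
        -o jsonpath='{.spec.replicas}') || return 1

    if [ "$current" -lt "$min" ]; then
        kubectl scale "deployment/$name" -n "$ns" --replicas="$min"
    else
        echo "deployment/$name already runs $current replicas"
    fi
}
```

For example, `ensure_min_replicas my-app 2` would leave a deployment with two or more replicas untouched (`my-app` is a placeholder name). Extra replicas only help during node redeployment if they are scheduled on different workers.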

Kubernetes Cluster Statistics and Pay-per-Use Billing

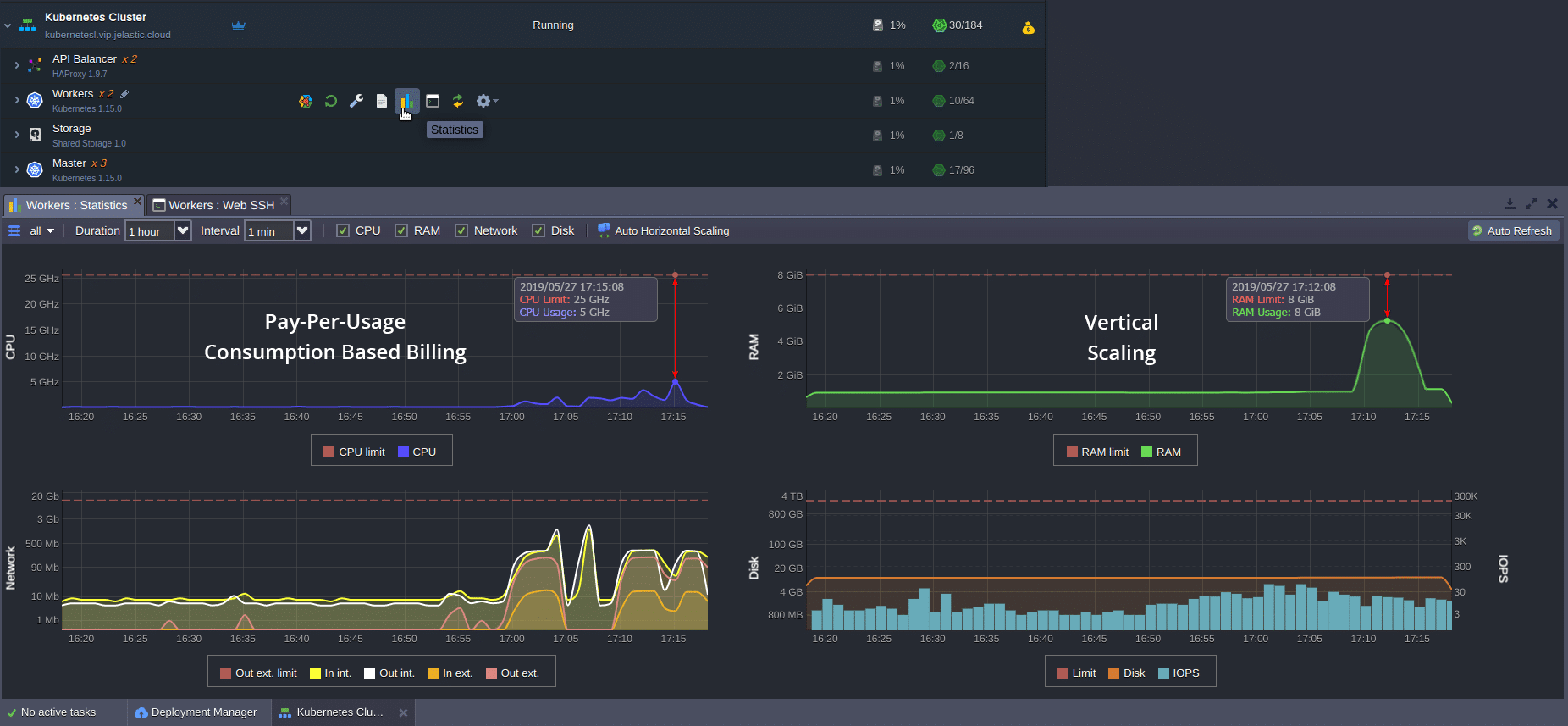

Jelastic PaaS provides automatic vertical scaling for each worker and master node in the Kubernetes cluster. The required resources are allocated on demand based on the real-time load, so there is no need to monitor the changes all the time: the platform does it for you. In addition, there is a convenient way to check the current load across a group of nodes or on each node separately: just press the Statistics button next to the required layer or specific node.

Such highly automated scaling and the full containerization of Jelastic PaaS enable a billing model that is still relatively new in cloud computing. Despite its novelty, this “pay-per-use” (or “pay-as-you-use”) model has already gained a reputation as the most cost-effective approach. As a result, you pay for Kubernetes hosting within Jelastic PaaS only for the resources actually used, with no need to overallocate. This solves the “right-sizing” problem inherited from the first generation of cloud pricing (the “pay-per-limits”, or “pay-as-you-go”, approach).

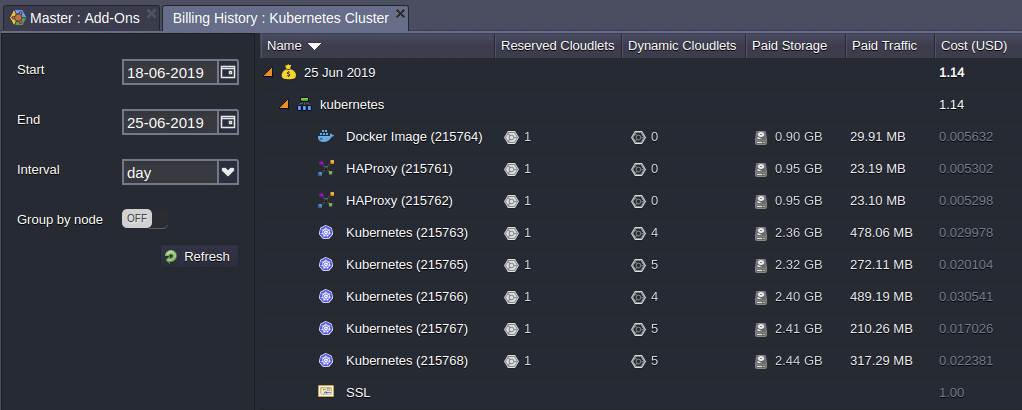

The whole billing process is transparent and can be tracked via the Jelastic PaaS dashboard (Balance > Billing History). The price is based on the number of resource units, called cloudlets (128 MB of RAM + 400 MHz of CPU each), that are actually consumed. Such granularity provides more flexibility in how the bill is formed, as well as clarity in cloud expenditures.
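To make the cloudlet unit more tangible, here is a small arithmetic sketch. The function name is made up, and it assumes that consumption is rounded up to whole cloudlets and that the larger of the RAM and CPU figures determines the count; the article itself only defines the unit size, so check the platform's billing documentation for the exact rule.

```shell
#!/bin/sh
# Sketch: estimate how many cloudlets a given consumption corresponds to.
# Assumption (not from the article): usage is rounded up to whole cloudlets
# and the larger of the RAM and CPU counts is the one that applies.
# Usage: cloudlets_used <ram_mb> <cpu_mhz>
cloudlets_used() {
    ram_mb=$1
    cpu_mhz=$2
    ram_units=$(( (ram_mb + 127) / 128 ))    # ceiling of ram_mb / 128
    cpu_units=$(( (cpu_mhz + 399) / 400 ))   # ceiling of cpu_mhz / 400
    if [ "$ram_units" -gt "$cpu_units" ]; then
        echo "$ram_units"
    else
        echo "$cpu_units"
    fi
}

# 300 MB of RAM needs 3 cloudlets, 500 MHz of CPU needs 2, so this prints 3.
cloudlets_used 300 500
```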

Jelastic PaaS allows automatic vertical scaling of Kubernetes cluster nodes, automatic horizontal scaling with auto-discovery of newly added workers, management via an intuitive UI dashboard, as well as implementation of the required CI/CD pipelines with Cloud Scripting and an open API. Jelastic PaaS can provision clusters across multiple clouds and on-premises with no vendor lock-in and with full interoperability across the clouds. This lets teams focus their valuable resources on developing applications and services instead of spending time on adjusting and supporting the infrastructure and API differences of each Kubernetes cluster service implementation.

Try it out at our Jelastic PaaS platform and share your feedback with us for further improvements!